Rethinking the role of frames for SE(3)-invariant crystal structure modeling

ICLR 2025

TL;DR To make a GNN invariant to rotations, let's standardize the orientations of local atomic environments represented by internal self-attention weights, instead of directly standardizing the global structure.

Overview

Crystal structure modeling with graph neural networks is essential for various applications in materials informatics, and capturing SE(3)-invariant geometric features is a fundamental requirement for these networks. A straightforward approach is to model with orientation-standardized structures through structure-aligned coordinate systems, or “frames.” However, unlike molecules, determining frames for crystal structures is challenging due to their infinite and highly symmetric nature. In particular, existing methods rely on a statically fixed frame for each structure, determined solely by its structural information, regardless of the task under consideration. Here, we rethink the role of frames, questioning whether such simplistic alignment with the structure is sufficient, and propose the concept of dynamic frames. While accommodating the infinite and symmetric nature of crystals, these frames provide each atom with a dynamic view of its local environment, focusing on actively interacting atoms. We demonstrate this concept by utilizing the attention mechanism in a recent transformer-based crystal encoder, resulting in a new architecture called CrystalFramer. Extensive experiments show that CrystalFramer outperforms conventional frames and existing crystal encoders in various crystal property prediction tasks.

Problem

Crystal structure

Crystal structures are periodic arrangements of atoms in 3D space, serving as the source codes for diverse materials, such as permanent magnets, battery materials, and superconductors.

Crystal structure in 2D space

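A crystal structure is typically described by its repeatable 3D slice called a unit cell. We assume a unit cell consisting of $N$ atoms and denote it as $(\mathcal{X}, \mathcal{P}, \mathcal{L})$:

- $\mathcal{X} = \{x_1, \dots, x_N\}$: the species (atomic numbers) of unit cell atoms.

- $\mathcal{P} = \{\mathbf{p}_1, \dots, \mathbf{p}_N\}$: the 3D Cartesian coordinates of unit cell atoms.

- $\mathcal{L} = \{\mathbf{l}_1, \mathbf{l}_2, \mathbf{l}_3\}$: lattice vectors that define periodic unit-cell translations in 3D space.

By tiling the unit cell to fill 3D space, the species and positions of atoms in the crystal structure are determined as follows.

$$x_{i(\mathbf{n})} = x_i, \qquad \mathbf{p}_{i(\mathbf{n})} = \mathbf{p}_i + n_1 \mathbf{l}_1 + n_2 \mathbf{l}_2 + n_3 \mathbf{l}_3, \qquad \mathbf{n} = (n_1, n_2, n_3) \in \mathbb{Z}^3$$

Here, we use $i$ to denote the $i$-th atom in the unit cell, and use $i(\mathbf{n})$ to denote its duplicate by the 3D translation: $n_1 \mathbf{l}_1 + n_2 \mathbf{l}_2 + n_3 \mathbf{l}_3$. We use $j$ and $j(\mathbf{n})$ similarly.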
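The tiling described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `tile_unit_cell`, the truncation parameter `reps`, and the two-atom cubic cell are assumptions made for the example, and a real crystal has infinitely many images rather than the finite block enumerated here.

```python
from itertools import product

def tile_unit_cell(species, positions, lattice, reps=1):
    """Enumerate periodic images of unit-cell atoms for n in {-reps, ..., reps}^3.

    Returns a list of (i, n, species_i, position_i_n) tuples, where
    position_i_n = p_i + n1*l1 + n2*l2 + n3*l3.
    """
    images = []
    for n in product(range(-reps, reps + 1), repeat=3):
        # Translation vector n1*l1 + n2*l2 + n3*l3 for this integer triple.
        shift = tuple(sum(n[k] * lattice[k][a] for k in range(3)) for a in range(3))
        for i, (x_i, p_i) in enumerate(zip(species, positions)):
            p_in = tuple(p_i[a] + shift[a] for a in range(3))
            images.append((i, n, x_i, p_in))
    return images

# Hypothetical two-atom cubic cell (CsCl-like), lengths in angstroms.
species = [55, 17]
positions = [(0.0, 0.0, 0.0), (2.0, 2.0, 2.0)]
lattice = [(4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 4.0)]

images = tile_unit_cell(species, positions, lattice, reps=1)
print(len(images))  # 27 cells x 2 atoms = 54 images
```

Each image keeps the species of its unit-cell atom and only shifts the position, which is why a model only needs states for the $N$ unit-cell atoms.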

SE(3)-invariant structural modeling

We consider the problem of estimating the physical state of a given crystal structure, assuming that the state remains invariant under rigid transformations (i.e., rotations and translations). Such a state typically corresponds to material properties, such as formation energy and bandgap.

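We represent the state of a crystal structure by a set of abstract atom-wise state features for the unit-cell atoms:

$$\mathcal{H} = \{\mathbf{h}_1, \dots, \mathbf{h}_N\}$$

As input to a graph neural network (GNN), these features are usually initialized via atom embeddings of the atomic species $x_i$:

$$\mathbf{h}_i^{(0)} = \mathrm{AtomEmbedding}(x_i)$$

which only symbolically represent atomic species. They are then evolved through message-passing layers

$$\mathcal{H}^{(0)} \rightarrow \mathcal{H}^{(1)} \rightarrow \cdots \rightarrow \mathcal{H}^{(T)}$$

to eventually reflect the atomic states appropriate for a target task.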

Challenges in SE(3)-invariant GNNs

There are several approaches to ensuring SE(3) invariance in GNNs:

- Invariant features: Leveraging inherently invariant geometric features, such as interatomic distances and angles between atom triplets, ensures SE(3) invariance. However, fully distance-based models have limited expressive power, and incorporating three-body interactions significantly increases computational complexity.

- Frames: Another straightforward approach is to standardize the orientation of a given structure through a structure-aligned coordinate system called a frame. However, determining frames for crystal structures is challenging due to their infinite and highly symmetric nature.
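The invariance of distances and triplet angles mentioned in the first item can be checked numerically. The sketch below is a minimal illustration under simplifying assumptions: the three atom positions are made up, and the rigid motion is restricted to a rotation about the z-axis followed by a translation (a special case of a general SE(3) transformation).

```python
import math

def rotate_z(p, angle):
    """Rotate point p about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1], p[2])

def rigid_transform(p, angle, shift):
    """Apply a rotation about z followed by a translation."""
    q = rotate_z(p, angle)
    return tuple(q[a] + shift[a] for a in range(3))

def distance(p, q):
    return math.dist(p, q)

def triplet_angle(p_i, p_j, p_k):
    """Angle at atom i between the directions to atoms j and k (radians)."""
    u = [p_j[a] - p_i[a] for a in range(3)]
    v = [p_k[a] - p_i[a] for a in range(3)]
    cos = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos)))

# Hypothetical positions of three atoms i, j, k.
atoms = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 2.0, 1.0)]
moved = [rigid_transform(p, 0.7, (3.0, -1.0, 2.0)) for p in atoms]

d_before, d_after = distance(atoms[0], atoms[1]), distance(moved[0], moved[1])
a_before, a_after = triplet_angle(*atoms), triplet_angle(*moved)
coords_changed = moved[1] != atoms[1]  # raw coordinates are not invariant
```

The raw coordinates change under the rigid motion while the distance and the angle do not, which is what makes them safe inputs for an invariant model.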

We explore a new frame-based methodology to incorporate richer yet invariant structural information beyond distances.

Ideas

What is the role of frames?

Surely, it is to standardize the orientations of given structures so that GNN models can directly exploit 3D coordinate information as invariant geometric features.

── Is that all?

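Let’s dig deeper into how frames work in a GNN, whose message-passing layers are assumed to include the following general operation:

$$\mathbf{h}_i' = \sum_{j=1}^{N} \sum_{\mathbf{n} \in \mathbb{Z}^3} w_{ij(\mathbf{n})}\, \mathbf{f}_{ij(\mathbf{n})}$$

This equation describes that the state $\mathbf{h}_i$ of each unit-cell atom $i$ is updated by receiving abstract influences or “messages”, $\mathbf{f}_{ij(\mathbf{n})}$, from atoms $j(\mathbf{n})$ in the structure, weighted by scalars $w_{ij(\mathbf{n})}$. In recent transformer models, these weights are determined dynamically via self-attention mechanisms.

Distance-based GNNs ensure SE(3) invariance by simply formulating $\mathbf{f}_{ij(\mathbf{n})}$ with the interatomic distance, $\|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|$, as follows:

$$\mathbf{f}_{ij(\mathbf{n})} = f\big(\mathbf{h}_i, \mathbf{h}_j, \|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|\big)$$

The role of frames is to offer, for the design of the message function $f$, more informative invariant features beyond the distance through a structure-aligned coordinate system $\mathbf{F} = [\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3]$, as follows:

$$\mathbf{f}_{ij(\mathbf{n})} = f\big(\mathbf{h}_i, \mathbf{h}_j, \mathbf{F}^\top (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i)\big)$$

where the frame-projected relative position $\mathbf{F}^\top (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i)$ remains invariant under global rotations and translations for the crystal structure.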
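The invariance claim can be verified in one line. As an assumption of this sketch, write $\mathbf{F}$ for the frame (a matrix whose columns are the axes) and suppose it is built from the structure so that it rotates with it: a rigid motion with rotation $\mathbf{R}$ and translation $\mathbf{t}$ maps every position $\mathbf{p} \mapsto \mathbf{R}\mathbf{p} + \mathbf{t}$ and the frame $\mathbf{F} \mapsto \mathbf{R}\mathbf{F}$. Then, for atoms $i$ and $j(\mathbf{n})$:

$$(\mathbf{R}\mathbf{F})^\top \big[(\mathbf{R}\mathbf{p}_{j(\mathbf{n})} + \mathbf{t}) - (\mathbf{R}\mathbf{p}_i + \mathbf{t})\big] = \mathbf{F}^\top \mathbf{R}^\top \mathbf{R}\, (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i) = \mathbf{F}^\top (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i)$$

The translation cancels in the difference, and the rotation cancels because $\mathbf{R}^\top \mathbf{R} = \mathbf{I}$.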

Dynamic frames

Given that the end-users of frames are the message functions in GNNs, shouldn't we tailor a frame for each message function in each layer so that the function receives a better-normalized structure?

── We pursue this idea by introducing the concept of dynamic frames.

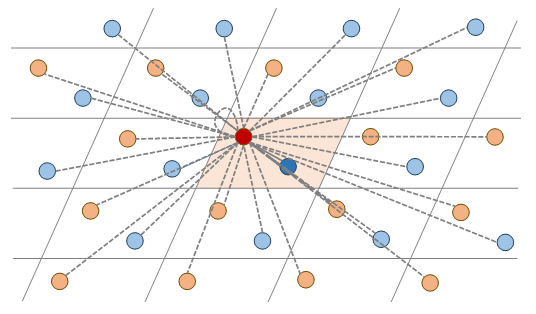

In each message passing layer, the target atom $i$ receives more influences from atoms $j(\mathbf{n})$ with larger weights $w_{ij(\mathbf{n})}$, and no influence from atoms with zero weights. This means that, when updating the state of atom $i$, this atom has its own partial and local view of the structure through weights $w_{ij(\mathbf{n})}$ acting as a mask on the structure.

Dynamic frame in 2D space

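As a dynamic frame, we therefore construct an atom-wise frame $\mathbf{F}_i$ for each target atom $i$ by using this masked view of the structure with weights $w_{ij(\mathbf{n})}$, as follows:

$$\mathbf{F}_i = \mathrm{frame}\big(\{(w_{ij(\mathbf{n})},\ \mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i)\}_{j, \mathbf{n}}\big)$$

Typically, we define an orthonormal basis $\mathbf{F}_i = [\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3]$ as a frame, where the first and second axes point towards the primary and secondary influential directions of interatomic interactions. (See the paper for detailed definitions.)

This dynamic frame is then used to project the relative position vectors $\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i$ in order to derive the messages $\mathbf{f}_{ij(\mathbf{n})}$ for the target atom $i$, as follows:

$$\mathbf{f}_{ij(\mathbf{n})} = f\big(\mathbf{h}_i, \mathbf{h}_j, \mathbf{F}_i^\top (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i)\big)$$

Importantly, our dynamic frames are constructed with the entire structure, rather than with a specific unit-cell representation. Thus, our dynamic frames are invariant under the unit-cell variations within the same crystal structure.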
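One way to read "primary and secondary influential directions" is sketched below. This is a simplified illustration, not the paper's exact definition: the function `max_frame`, the collinearity tolerance `eps`, and the toy weights and neighbor positions are all assumptions. The first axis points at the neighbor with the largest weight, the second is the next-largest-weight neighbor after Gram-Schmidt orthogonalization, and the third is their cross product.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def max_frame(weights, rel_positions, eps=1e-6):
    """Build an orthonormal frame (e1, e2, e3) for one target atom.

    weights:       attention-like weights >= 0, one per neighbor
    rel_positions: nonzero relative positions p_j(n) - p_i, one per neighbor
    """
    order = sorted(range(len(weights)), key=lambda t: weights[t], reverse=True)
    e1 = unit(rel_positions[order[0]])          # primary direction
    for t in order[1:]:
        r = rel_positions[t]
        # Gram-Schmidt: remove the component of r along e1.
        perp = tuple(r[a] - dot(r, e1) * e1[a] for a in range(3))
        if math.sqrt(dot(perp, perp)) > eps * math.sqrt(dot(r, r)):
            e2 = unit(perp)                     # secondary direction
            return e1, e2, cross(e1, e2)        # e3 completes a right-handed basis
    raise ValueError("neighbors are collinear; no unique frame")

def project(frame, r):
    """Coordinates of r in the frame, i.e. F^T r."""
    return tuple(dot(e, r) for e in frame)

def rotate(p, angle=0.7):
    """A fixed test rotation: about z, then about x, by the same angle."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = c * p[0] - s * p[1], s * p[0] + c * p[1], p[2]
    return (x, c * y - s * z, s * y + c * z)

# Hypothetical weights and relative positions of four neighbors.
weights = [0.5, 0.3, 0.1, 0.1]
rel = [(1.0, 0.0, 0.0), (0.5, 2.0, 0.0), (0.0, 0.0, 3.0), (-2.0, 0.0, 0.0)]

frame = max_frame(weights, rel)
frame_rot = max_frame(weights, [rotate(r) for r in rel])

coords = [project(frame, r) for r in rel]
coords_rot = [project(frame_rot, rotate(r)) for r in rel]
```

Because the weights are themselves invariant, the frame rotates together with the neighbors, so `coords` and `coords_rot` agree up to floating-point error.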

CrystalFramer

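We demonstrate the proposed concept of dynamic frames by utilizing the Crystalformer architecture (Taniai et al., ICLR 2024). Crystalformer employs the standard softmax self-attention for message passing, which is formulated as infinitely connected distance-decay attention as follows:

$$\mathbf{y}_i = \frac{1}{Z_i} \sum_{j=1}^{N} \sum_{\mathbf{n}} \exp\!\left(\frac{\mathbf{q}_i^\top \mathbf{k}_j}{\sqrt{d_K}} - \frac{\|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|^2}{2\sigma_i^2}\right) \big(\mathbf{v}_j + \boldsymbol{\psi}_{ij(\mathbf{n})}\big)$$

Here, query $\mathbf{q}_i$, key $\mathbf{k}_j$, and value $\mathbf{v}_j$ are linear projections of the current atom states, $d_K$ is the key dimension, and $\sigma_i$ controls the width of the distance decay. Scalar $Z_i$ is the normalizer of softmax attention weights. Vector $\boldsymbol{\psi}_{ij(\mathbf{n})}$ is a geometric relative position encoding for atoms $i$ and $j(\mathbf{n})$.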
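A minimal sketch of distance-decay attention over periodic images is given below, under several stated assumptions: the infinite sum over images is truncated to a finite block (`reps`), a single fixed decay width `sigma` is used for all atoms, the relative position encoding added to the values is omitted, and the query, key, and value vectors are toy inputs rather than learned projections.

```python
import math
from itertools import product

def distance_decay_attention(q, k, v, positions, lattice, sigma=1.5, reps=2):
    """Softmax attention over periodic images with a Gaussian distance decay.

    q, k, v:   per-atom query/key/value vectors (lists of floats), length N
    positions: Cartesian coordinates of the N unit-cell atoms
    lattice:   three lattice vectors
    reps:      images n in {-reps, ..., reps}^3 (finite truncation of the sum)
    Returns (outputs, weights); weights[i] maps (j, n) to a normalized weight.
    """
    d_k = len(q[0])
    cells = list(product(range(-reps, reps + 1), repeat=3))
    outputs, weights = [], []
    for i, p_i in enumerate(positions):
        logits = {}
        for j, p_j in enumerate(positions):
            qk = sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_k)
            for n in cells:
                shift = [sum(n[m] * lattice[m][a] for m in range(3)) for a in range(3)]
                dist2 = sum((p_j[a] + shift[a] - p_i[a]) ** 2 for a in range(3))
                logits[(j, n)] = qk - dist2 / (2.0 * sigma ** 2)
        top = max(logits.values())                      # for numerical stability
        expd = {key: math.exp(val - top) for key, val in logits.items()}
        z_i = sum(expd.values())                        # softmax normalizer
        w_i = {key: val / z_i for key, val in expd.items()}
        y_i = [sum(w * v[j][c] for (j, _), w in w_i.items()) for c in range(len(v[0]))]
        outputs.append(y_i)
        weights.append(w_i)
    return outputs, weights

# Hypothetical two-atom cubic cell with toy one-hot features.
positions = [(0.0, 0.0, 0.0), (2.0, 2.0, 2.0)]
lattice = [(4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 4.0)]
q = k = v = [[1.0, 0.0], [0.0, 1.0]]

outputs, weights = distance_decay_attention(q, k, v, positions, lattice)
```

The Gaussian decay makes distant images contribute almost nothing, which is what lets a finite truncation approximate the infinite sum. The returned per-image weights are the kind of quantity a dynamic frame would be built from.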

Originally, the relative position encoding $\boldsymbol{\psi}_{ij(\mathbf{n})}$ simply encodes the scalar distance $\|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|$ via a linear projection of Gaussian basis functions (GBFs). In this work, we enhance the model's expressive power by incorporating frame-based geometric features into Crystalformer's relative position encoding. This results in a new architecture, CrystalFramer.

Frame-based invariant features

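Given the unit direction vector $\bar{\mathbf{r}}_{ij(\mathbf{n})} = (\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i) / \|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|$, we obtain its invariant representation $\boldsymbol{\theta}_{ij(\mathbf{n})} = \mathbf{F}_i^\top \bar{\mathbf{r}}_{ij(\mathbf{n})}$, where the $k$-th component represents the cosine of the angle between the $k$-th axis $\mathbf{e}_k$ of the frame $\mathbf{F}_i$ and the direction:

$$\theta_{ij(\mathbf{n})}^{(k)} = \mathbf{e}_k^\top \bar{\mathbf{r}}_{ij(\mathbf{n})}, \qquad k = 1, 2, 3$$

Using GBFs as a mapping from a scalar to a vector, we linearly combine the distance-based and three angle-based edge features, as follows:

$$\boldsymbol{\psi}_{ij(\mathbf{n})} = \mathbf{W}_0\, \mathrm{GBF}\big(\|\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i\|\big) + \sum_{k=1}^{3} \mathbf{W}_k\, \mathrm{GBF}\big(\theta_{ij(\mathbf{n})}^{(k)}\big)$$

where $\mathbf{W}_0, \dots, \mathbf{W}_3$ are learnable projection matrices. This $\boldsymbol{\psi}_{ij(\mathbf{n})}$ as a whole essentially encodes the 3D relative position vector, $\mathbf{p}_{j(\mathbf{n})} - \mathbf{p}_i$.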
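The frame-based edge features described above can be sketched as follows. The basis centers and widths are hypothetical hyperparameters chosen for the example, and the learned linear projection is left out: the sketch returns the concatenated GBF expansions that such a projection would act on.

```python
import math

def gbf(x, centers, width):
    """Gaussian basis functions: map a scalar x to a vector of Gaussian bumps."""
    return [math.exp(-((x - c) ** 2) / (2.0 * width ** 2)) for c in centers]

def frame_edge_features(frame, r, dist_centers, cos_centers,
                        dist_width=0.5, cos_width=0.25):
    """Distance plus three frame-axis cosines, each expanded with GBFs.

    frame: three orthonormal axes (e1, e2, e3)
    r:     relative position vector from the target atom to a neighbor
    """
    dist = math.sqrt(sum(c * c for c in r))
    direction = [c / dist for c in r]                     # unit direction vector
    cosines = [sum(e[a] * direction[a] for a in range(3)) for e in frame]
    features = gbf(dist, dist_centers, dist_width)        # distance-based part
    for c in cosines:
        features += gbf(c, cos_centers, cos_width)        # three angle-based parts
    return dist, cosines, features

# Hypothetical frame (identity axes) and basis centers.
frame = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
dist_centers = [0.5 * t for t in range(17)]        # 0.0, 0.5, ..., 8.0
cos_centers = [-1.0 + 0.25 * t for t in range(9)]  # -1.0, -0.75, ..., 1.0

dist, cosines, features = frame_edge_features(
    frame, (0.0, 3.0, 4.0), dist_centers, cos_centers)
```

The distance and the three cosines together determine the relative position in the frame's coordinates, so the concatenated features carry the full 3D information in invariant form.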

Architecture

Below is the architecture of CrystalFramer, where we have introduced dynamic frame construction and frame-based edge features, as highlighted in the figure.

CrystalFramer architecture

Given the multi-head self-attention mechanism, we dynamically construct a frame for each target atom, head, and layer during the self-attention operation.

Property Prediction Benchmarks

We evaluated the performance of CrystalFramer using two types of dynamic frames: weighted PCA frames and max frames. We compared these with existing crystal frames (PCA frames and lattice frames) and other state-of-the-art crystal encoders. For evaluation, we used three datasets: JARVIS (55,723 materials), Materials Project (69,239 materials), and OQMD (817,636 materials).

JARVIS dataset

| Method | E form | E total | BG (OPT) | BG (MBJ) | E hull |
|---|---|---|---|---|---|
| CGCNN (Xie & Grossman, 2018) | 0.063 | 0.078 | 0.20 | 0.41 | 0.17 |
| SchNet (Schütt et al., 2018) | 0.045 | 0.047 | 0.19 | 0.43 | 0.14 |
| MEGNet (Chen et al., 2019) | 0.047 | 0.058 | 0.145 | 0.34 | 0.084 |
| GATGNN (Louis et al., 2020) | 0.047 | 0.056 | 0.17 | 0.51 | 0.12 |
| M3GNet (Chen et al., 2022) | 0.039 | 0.041 | 0.145 | 0.362 | 0.095 |
| ALIGNN (Choudhary et al., 2021) | 0.0331 | 0.037 | 0.142 | 0.31 | 0.076 |
| Matformer (Yan et al., 2022) | 0.0325 | 0.035 | 0.137 | 0.30 | 0.064 |
| PotNet (Lin et al., 2023) | 0.0294 | 0.032 | 0.127 | 0.27 | 0.055 |
| eComFormer (Yan et al., 2024) | 0.0284 | 0.032 | 0.124 | 0.28 | 0.044 |
| iComFormer (Yan et al., 2024) | 0.0272 | 0.0288 | 0.122 | 0.26 | 0.047 |
| Crystalformer (Taniai et al., 2024) | 0.0306 | 0.0320 | 0.128 | 0.274 | 0.0463 |
| ─ w/ PCA frames (Duval et al., 2023) | 0.0325 | 0.0334 | 0.144 | 0.292 | 0.0568 |
| ─ w/ lattice frames (Yan et al., 2024) | 0.0302 | 0.0323 | 0.125 | 0.274 | 0.0531 |
| ─ w/ static local frames | 0.0285 | 0.0292 | 0.122 | 0.261 | 0.0444 |
| ─ w/ weighted PCA frames (proposed) | 0.0287 | 0.0305 | 0.126 | 0.279 | 0.0444 |
| ─ w/ max frames (proposed) | 0.0263 | 0.0279 | 0.117 | 0.242 | 0.0471 |

Materials Project dataset

| Method | E form | BG | Bulk modulus | Shear modulus |
|---|---|---|---|---|
| CGCNN (Xie & Grossman, 2018) | 0.031 | 0.292 | 0.047 | 0.077 |
| SchNet (Schütt et al., 2018) | 0.033 | 0.345 | 0.066 | 0.099 |
| MEGNet (Chen et al., 2019) | 0.030 | 0.307 | 0.060 | 0.099 |
| GATGNN (Louis et al., 2020) | 0.033 | 0.280 | 0.045 | 0.075 |
| M3GNet (Chen et al., 2022) | 0.024 | 0.247 | 0.050 | 0.087 |
| ALIGNN (Choudhary et al., 2021) | 0.022 | 0.218 | 0.051 | 0.078 |
| Matformer (Yan et al., 2022) | 0.021 | 0.211 | 0.043 | 0.073 |
| PotNet (Lin et al., 2023) | 0.0188 | 0.204 | 0.040 | 0.065 |
| eComFormer (Yan et al., 2024) | 0.0182 | 0.202 | 0.0417 | 0.0729 |
| iComFormer (Yan et al., 2024) | 0.0183 | 0.193 | 0.0380 | 0.0637 |
| Crystalformer (Taniai et al., 2024) | 0.0186 | 0.198 | 0.0377 | 0.0689 |
| ─ w/ PCA frames (Duval et al., 2023) | 0.0197 | 0.217 | 0.0424 | 0.0719 |
| ─ w/ lattice frames (Yan et al., 2024) | 0.0194 | 0.212 | 0.0389 | 0.0720 |
| ─ w/ static local frames | 0.0178 | 0.191 | 0.0354 | 0.0708 |
| ─ w/ weighted PCA frames (proposed) | 0.0197 | 0.214 | 0.0423 | 0.0715 |
| ─ w/ max frames (proposed) | 0.0172 | 0.185 | 0.0338 | 0.0677 |

OQMD dataset

| Method | # Blocks | E form | BG | E hull |
|---|---|---|---|---|
| Crystalformer (baseline) | 4 | 0.02115 | 0.06028 | 0.06759 |
| CrystalFramer (max frames) | 4 | 0.01871 | 0.05805 | 0.06607 |
| Crystalformer (baseline) | 8 | 0.02104 | 0.05986 | 0.06690 |
| CrystalFramer (max frames) | 8 | 0.01778 | 0.05785 | 0.06454 |
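To put the OQMD numbers in perspective, the short script below computes the relative reduction of each CrystalFramer entry with respect to the Crystalformer baseline at the same depth. It assumes the table entries are prediction errors (lower is better); the values are copied from the table, and the helper name `relative_reduction` is introduced only for this example.

```python
# Values copied from the OQMD table (assumed to be errors; lower is better).
oqmd = {
    4: {"baseline": {"E form": 0.02115, "BG": 0.06028, "E hull": 0.06759},
        "max frames": {"E form": 0.01871, "BG": 0.05805, "E hull": 0.06607}},
    8: {"baseline": {"E form": 0.02104, "BG": 0.05986, "E hull": 0.06690},
        "max frames": {"E form": 0.01778, "BG": 0.05785, "E hull": 0.06454}},
}

def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

reductions = {
    (blocks, task): relative_reduction(rows["baseline"][task], rows["max frames"][task])
    for blocks, rows in oqmd.items()
    for task in rows["baseline"]
}

for (blocks, task), pct in reductions.items():
    print(f"{blocks} blocks, {task}: {pct:.1f}% lower than baseline")
```

Under that assumption, the largest gain is on E form (roughly 11.5% with 4 blocks and 15.5% with 8 blocks), while BG and E hull improve by a few percent.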

Overall, CrystalFramer with max frames lowers the error of the Crystalformer baseline on every task above except E hull on JARVIS (0.0471 vs. 0.0463), and outperforms most existing methods across tasks and datasets.

Visual Analysis

Max frames capture local motifs around the target atom, while weighted PCA frames look at the structure over broader areas. Both types of frames tend to focus on close neighbors in shallow layers and relatively distant neighbors in deeper layers.

Citation

@inproceedings{ito2025crystalframer,

title = {Rethinking the role of frames for SE(3)-invariant crystal structure modeling},

author = {Yusei Ito and

Tatsunori Taniai and

Ryo Igarashi and

Yoshitaka Ushiku and

Kanta Ono},

booktitle = {The Thirteenth International Conference on Learning Representations (ICLR 2025)},

year = {2025},

url = {https://openreview.net/forum?id=gzxDjnvBDa}

}