Multi-Agent Behavior Retrieval: Retrieval-Augmented Policy Training for Cooperative Manipulation by Mobile Robots

IROS2024

TL;DR introduces the Multi-Agent Coordination Skill Database, allowing multiple mobile robots to efficiently use past memories to adapt to new tasks.

Overview

Due to the complex interactions between agents, learning multi-agent control policy often requires a prohibitive amount of data. This paper aims to enable multi-agent systems to effectively utilize past memories to adapt to novel collaborative tasks in a data-efficient fashion. We propose the Multi-Agent Coordination Skill Database, a repository for storing a collection of coordinated behaviors associated with key vectors distinctive to them. Our Transformer-based skill encoder effectively captures spatio-temporal interactions that contribute to coordination and provides a unique skill representation for each coordinated behavior. By leveraging only a small number of demonstrations of the target task, the database enables us to train the policy using a dataset augmented with the retrieved demonstrations. Experimental evaluations demonstrate that our method achieves a significantly higher success rate in push manipulation tasks compared with baseline methods like few-shot imitation learning. Furthermore, we validate the effectiveness of our retrieve-and-learn framework in a real environment using a team of wheeled robots.

Video

Multi-Agent Behavior Retrieval

Retrieve-and-Learn Framework

Given a large task-agnostic prior dataset and a few demonstrations collected from the target task, our main objective is to retrieve coordination skills from that facilitate downstream policy learning for the target task.

Our retrieve-and-learn framework consists of three primary components: (i) Database Construction, (ii) Coordination Skill Retrieval, and (iii) Retrieval-Augmented Policy Training.

Multi-Agent Coordination Skill Database

consists of task-agnostic demonstrations of mobile agents. To effectively retrieve demonstrations from that are relevant to , we seek an abstract representation to measure the similarity between the two multi-agent demonstrations. We refer to this as multi-agent coordination skill representation, a compressed vector representation that is distinctive to the specific coordination behavior. For agents’ states , we aim to learn a skill encoder that maps the state representation of multiple agents into a single representative vector .

For this, we introduce a Transformer-based coordination skill encoder, which learns to capture interactions among agents as well as interactions between agents and a manipulation object.

Retrieval-Augmented Policy Training

We assume that the prior dataset and the target demonstrations are composed as follows,

| The prior dataset encompasses a large-scale offline demonstration of diverse cooperative tasks. The dataset also includes noisy or sub-optimal demonstrations to mimic real-world scenarios. All demonstrations are task-agnostic, i.e., each data is stored without any specific task annotations. | |

| The target dataset encompasses a small amount of expert data, i.e., all data is composed of wellcoordinated demonstrations to complete the target task. |

Given and , we aim to retrieve cooperative behaviors similar to those seen in in the learned coordination skill space. Once we obtain the retrieved data , we train a multi-agent control policy using augmented with . That is, the training data is described as .

Results

Quantitative Results on Simulated Demonstrations

We assume mobile robots are navigated to push an object toward a predefined goal state. To explore various coordination scenarios, we specifically focus on three different values of . For each of these setups, we collect demonstrations that involve four distinct tasks. These four tasks are carefully designed to encompass two different objects (stick or block) and manipulation difficulties (easy or hard).

| Num of Agents | task | success rate ⬆️ [%] | |||

|---|---|---|---|---|---|

| object | level | trajectory matching | few-shot imitation learning | ✨ours✨ | |

| 2 | 🧱block 🪄stick | hard easy hard easy | 41.4±5.9 55.7±6.0 32.9±5.7 68.6±5.6 | 20.0±4.8 15.7±4.4 5.7±2.8 12.9±4.0 | 41.4±5.9 57.1±6.0 35.7±5.8 67.1±5.7 |

| 3 | 🧱block 🪄stick | hard easy hard easy | 48.6±6.0 77.1±5.1 28.6±5.4 67.1±5.7 | 32.9±5.7 34.3±5.7 34.3±5.7 52.9±6.0 | 42.9±6.0 90.0±3.6 41.4±5.9 65.7±5.7 |

| 4 | 🧱block 🪄stick | hard easy hard easy | 55.7±6.0 78.6±4.9 18.6±4.7 52.9±6.0 | 38.6±5.9 55.7±6.0 7.10±3.1 57.1±6.0 | 58.6±5.9 88.6±3.8 24.3±5.2 70.0±5.5 |

| baselines | |

|---|---|

| TRAJECTORY MATCHING | We utilize a standard trajectory matching as a baseline to evaluate our retrieval method on the basis of multi-agent skill representation. By calculating the similarity of the robot’s -coordinate trajectories using FastDTW, we retrieve data from the that have trajectories similar to the target data’s trajectories. |

| FEW-SHOT IMITATION LEARNING | We compare our method with a few-shot adaptation method. We trained the multi-task policy from and fine-tuned it using . |

The Table shows our retrieval-augmented policy training outperforms few-shot imitation learning and agent-wise trajectory matching. These results indicate that our approach is more effective in tasks requiring advanced robot coordination, particularly in scenarios with a larger number of robots or in more complex tasks.

Real-robot Experiments

To validate the efficacy of our method in the real world, we use demonstrations of real wheeled robots for querying the prior dataset constructed in simulated environments. We then train the policy using a few real-robot data augmented with the retrieved simulation demonstrations.

We compare our policy trained using the real and retrieved demonstrations and the one trained using only the real-robot demonstrations. The results clearly demonstrate that the policy trained by our retrieve-and-learn framework successfully pushes an object closer to the goal state, while the policy trained using only a few real demonstrations (No Retrieved Data) fails to complete the task.

Acknowledgements

This work was supported by JST AIP Acceleration Research JPMJCR23U2, Japan.

Contact

Citation

@inproceedings{kuroki2024iros,

title={Multi-Agent Behavior Retrieval: Retrieval-Augmented Policy Training for Cooperative Push Manipulation by Mobile Robots},

author={So Kuroki, Mai Nishiura, Tadashi Kozuno},

booktitle={2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

organization={IEEE}

year={2024}

}

Relevant Projects

maru: a miniature-sized wheeled robot for swarm robotics research

"maru" (= miniature assemblage adaptive robot unit) is a custom-made, miniature-sized, two-wheeled robot designed specifically for tabletop swarm robotics research.

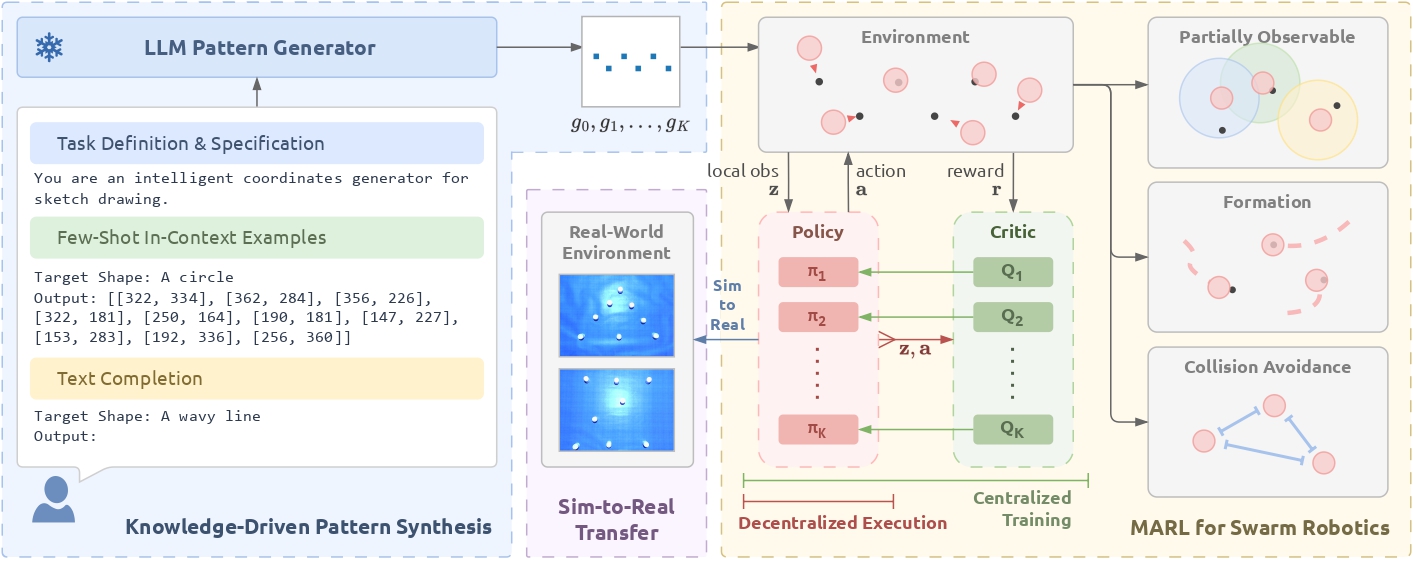

Language-Guided Pattern Formation for Swarm Robotics with Multi-Agent Reinforcement Learning

This paper explores how to leverage the vast knowledge encoded in large language models to tackle pattern formation challenges for swarm robotics systems.